- public static void fileCopy( File in, File out )

- throws IOException

- {

- FileChannel inChannel = new FileInputStream( in ).getChannel();

- FileChannel outChannel = new FileOutputStream( out ).getChannel();

- try

- {

- // inChannel.transferTo(0, inChannel.size(), outChannel); // original — apparently has trouble copying large files on Windows

- // magic number for Windows, 64Mb – 32Kb)

- int maxCount = (64 * 1024 * 1024) – (32 * 1024);

- long size = inChannel.size();

- long position = 0;

- while ( position < size )

- {

- position += inChannel.transferTo( position, maxCount, outChannel );

- }

- }

- finally

- {

- if ( inChannel != null )

- {

- inChannel.close();

- }

- if ( outChannel != null )

- {

- outChannel.close();

- }

- }

- }

Category: JAVA

列出文件和目录

- File dir = new File(“directoryName”);

- String[] children = dir.list();

- if (children == null) {

- // Either dir does not exist or is not a directory

- } else {

- for (int i=0; i < children.length; i++) {

- // Get filename of file or directory

- String filename = children[i];

- }

- }

- // It is also possible to filter the list of returned files.

- // This example does not return any files that start with `.’.

- FilenameFilter filter = new FilenameFilter() {

- public boolean accept(File dir, String name) {

- return !name.startsWith(“.”);

- }

- };

- children = dir.list(filter);

- // The list of files can also be retrieved as File objects

- File[] files = dir.listFiles();

- // This filter only returns directories

- FileFilter fileFilter = new FileFilter() {

- public boolean accept(File file) {

- return file.isDirectory();

- }

- };

- files = dir.listFiles(fileFilter);

创建ZIP和JAR文件 create zip jar

- import java.util.zip.*;

- import java.io.*;

- public class ZipIt {

- public static void main(String args[]) throws IOException {

- if (args.length < 2) {

- System.err.println(“usage: java ZipIt Zip.zip file1 file2 file3”);

- System.exit(-1);

- }

- File zipFile = new File(args[0]);

- if (zipFile.exists()) {

- System.err.println(“Zip file already exists, please try another”);

- System.exit(-2);

- }

- FileOutputStream fos = new FileOutputStream(zipFile);

- ZipOutputStream zos = new ZipOutputStream(fos);

- int bytesRead;

- byte[] buffer = new byte[1024];

- CRC32 crc = new CRC32();

- for (int i=1, n=args.length; i < n; i++) {

- String name = args[i];

- File file = new File(name);

- if (!file.exists()) {

- System.err.println(“Skipping: “ + name);

- continue;

- }

- BufferedInputStream bis = new BufferedInputStream(

- new FileInputStream(file));

- crc.reset();

- while ((bytesRead = bis.read(buffer)) != –1) {

- crc.update(buffer, 0, bytesRead);

- }

- bis.close();

- // Reset to beginning of input stream

- bis = new BufferedInputStream(

- new FileInputStream(file));

- ZipEntry entry = new ZipEntry(name);

- entry.setMethod(ZipEntry.STORED);

- entry.setCompressedSize(file.length());

- entry.setSize(file.length());

- entry.setCrc(crc.getValue());

- zos.putNextEntry(entry);

- while ((bytesRead = bis.read(buffer)) != –1) {

- zos.write(buffer, 0, bytesRead);

- }

- bis.close();

- }

- zos.close();

- }

- }

解析/读取XML 文件

- <?xml version=“1.0”?>

- <students>

- <student>

- <name>John</name>

- <grade>B</grade>

- <age>12</age>

- </student>

- <student>

- <name>Mary</name>

- <grade>A</grade>

- <age>11</age>

- </student>

- <student>

- <name>Simon</name>

- <grade>A</grade>

- <age>18</age>

- </student>

- </students>

- package net.viralpatel.java.xmlparser;

- import java.io.File;

- import javax.xml.parsers.DocumentBuilder;

- import javax.xml.parsers.DocumentBuilderFactory;

- import org.w3c.dom.Document;

- import org.w3c.dom.Element;

- import org.w3c.dom.Node;

- import org.w3c.dom.NodeList;

- public class XMLParser {

- public void getAllUserNames(String fileName) {

- try {

- DocumentBuilderFactory dbf = DocumentBuilderFactory.newInstance();

- DocumentBuilder db = dbf.newDocumentBuilder();

- File file = new File(fileName);

- if (file.exists()) {

- Document doc = db.parse(file);

- Element docEle = doc.getDocumentElement();

- // Print root element of the document

- System.out.println(“Root element of the document: “

- + docEle.getNodeName());

- NodeList studentList = docEle.getElementsByTagName(“student”);

- // Print total student elements in document

- System.out

- .println(“Total students: “ + studentList.getLength());

- if (studentList != null && studentList.getLength() > 0) {

- for (int i = 0; i < studentList.getLength(); i++) {

- Node node = studentList.item(i);

- if (node.getNodeType() == Node.ELEMENT_NODE) {

- System.out

- .println(“=====================”);

- Element e = (Element) node;

- NodeList nodeList = e.getElementsByTagName(“name”);

- System.out.println(“Name: “

- + nodeList.item(0).getChildNodes().item(0)

- .getNodeValue());

- nodeList = e.getElementsByTagName(“grade”);

- System.out.println(“Grade: “

- + nodeList.item(0).getChildNodes().item(0)

- .getNodeValue());

- nodeList = e.getElementsByTagName(“age”);

- System.out.println(“Age: “

- + nodeList.item(0).getChildNodes().item(0)

- .getNodeValue());

- }

- }

- } else {

- System.exit(1);

- }

- }

- } catch (Exception e) {

- System.out.println(e);

- }

- }

- public static void main(String[] args) {

- XMLParser parser = new XMLParser();

- parser.getAllUserNames(“c:\\test.xml”);

- }

- }

使用iText JAR生成PDF

- import java.io.File;

- import java.io.FileOutputStream;

- import java.io.OutputStream;

- import java.util.Date;

- import com.lowagie.text.Document;

- import com.lowagie.text.Paragraph;

- import com.lowagie.text.pdf.PdfWriter;

- public class GeneratePDF {

- public static void main(String[] args) {

- try {

- OutputStream file = new FileOutputStream(new File(“C:\\Test.pdf”));

- Document document = new Document();

- PdfWriter.getInstance(document, file);

- document.open();

- document.add(new Paragraph(“Hello Kiran”));

- document.add(new Paragraph(new Date().toString()));

- document.close();

- file.close();

- } catch (Exception e) {

- e.printStackTrace();

- }

- }

- }

创建图片的缩略图 createThumbnail

- private void createThumbnail(String filename, int thumbWidth, int thumbHeight, int quality, String outFilename)

- throws InterruptedException, FileNotFoundException, IOException

- {

- // load image from filename

- Image image = Toolkit.getDefaultToolkit().getImage(filename);

- MediaTracker mediaTracker = new MediaTracker(new Container());

- mediaTracker.addImage(image, 0);

- mediaTracker.waitForID(0);

- // use this to test for errors at this point: System.out.println(mediaTracker.isErrorAny());

- // determine thumbnail size from WIDTH and HEIGHT

- double thumbRatio = (double)thumbWidth / (double)thumbHeight;

- int imageWidth = image.getWidth(null);

- int imageHeight = image.getHeight(null);

- double imageRatio = (double)imageWidth / (double)imageHeight;

- if (thumbRatio < imageRatio) {

- thumbHeight = (int)(thumbWidth / imageRatio);

- } else {

- thumbWidth = (int)(thumbHeight * imageRatio);

- }

- // draw original image to thumbnail image object and

- // scale it to the new size on-the-fly

- BufferedImage thumbImage = new BufferedImage(thumbWidth, thumbHeight, BufferedImage.TYPE_INT_RGB);

- Graphics2D graphics2D = thumbImage.createGraphics();

- graphics2D.setRenderingHint(RenderingHints.KEY_INTERPOLATION, RenderingHints.VALUE_INTERPOLATION_BILINEAR);

- graphics2D.drawImage(image, 0, 0, thumbWidth, thumbHeight, null);

- // save thumbnail image to outFilename

- BufferedOutputStream out = new BufferedOutputStream(new FileOutputStream(outFilename));

- JPEGImageEncoder encoder = JPEGCodec.createJPEGEncoder(out);

- JPEGEncodeParam param = encoder.getDefaultJPEGEncodeParam(thumbImage);

- quality = Math.max(0, Math.min(quality, 100));

- param.setQuality((float)quality / 100.0f, false);

- encoder.setJPEGEncodeParam(param);

- encoder.encode(thumbImage);

- out.close();

- }

ElasticSearch 简单入门

简介

ElasticSearch是一个开源的分布式搜索引擎,具备高可靠性,支持非常多的企业级搜索用例。像Solr4一样,是基于Lucene构建的。支持时间时间索引和全文检索。官网:http://www.elasticsearch.org

它对外提供一系列基于java和http的api,用于索引、检索、修改大多数配置。

写这篇博客的的主要原因是ElasticSearch的网站只有一些简单的介绍,质量不高,缺少完整的教程。我费了好大劲才把它启动起来,做了一些比hello world更复杂一些的工作。我希望通过分享我的一些经验来帮助对ElasticSearch(很强大的哦)感兴趣的人在初次使用它的时候能够节省些时间。学完这篇教程,你就掌握了它的基本操作——启动、运行。我将从我的电脑上分享这个链接。

这么着就开始了。

- 作者假设读者拥有安装后的Java。

- 下载来自http://www.elasticsearch.org/download/的ElasticSearch。再一次,关于在Linux与其他非视窗系统环境里操作它的谈论有许多,但是作者更加关心着视窗7版桌面环境。请对应选择安装包裹。对视窗系统 – 一Zip文件 – 用户可解压缩到C:\elasticsearch-0.90.3\. 牢记,这十分的不同于安装Eclipse IDE。

- 作者不熟悉curl跟cygwin,而且作者打算节省掌握时间(此多数在官网ElasticSearch.org应用的命令面对非视窗平台)(译者:大可以安装一虚拟机、便携版Linux或者MinGW)。读者可以在http://curl.haxx.se/download.html和http://cygwin.com/install.html安装Curl和cygwin。

于是测试下目前作者和读者所做到的。

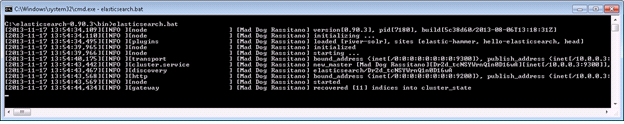

- 视窗7版桌面环境,运行命令行,进入 cd C:\elasticsearch-0.90.3\bin 目录。

- 这时运行 elasticsearch.bat

- 上面在本机启动了一个ElasticSearch节点。 读者会看到下面的记录提示。

(如果您家情况明显不一样,请读者们不要忧愁,因那作者有些个Elastic Search的插件程序,而且作者家节点命名和其它会不同读者家的)

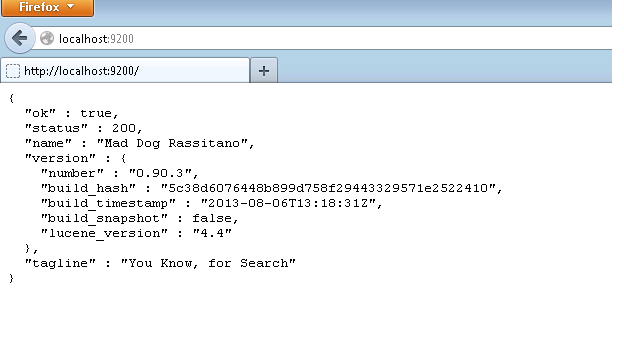

4. 现在在浏览器里测试一下

如果你得到的status是200那它意味着所有的事情都ok啦…是不是很简单?

让我们看看JSON的每个字段代表的含义:

Ok:当为true时,意味着请求成功。

Status:发出请求后的HTTP的错误代码。200表示一切正常。

Name:我们Elasticsearch实例的名字。在默认情况下,它将从一个巨长的名字列表中随机选择一个。

Version:这个对象有一个number字段,代表了当前运行的Elasticsearch版本号,和一个Snapshot_build字段,代表了你当前运行的版本是否是从源代码构建而来。

Tagline:包含了Elasticsearch的第一个tagline: “You Know, for Search.”

5. 现在让我们从http://mobz.github.io/elasticsearch-head/ 安装ElasticSearch Head插件

安装方法非常简单

1 |

cd C:\elasticsearch-0.90.3\bin |

2 |

plugin -install mobz/elasticsearch-head |

上面的命令会把 elasticsearch-head插件装到你的环境里

教程样例

我们将要部署一个非常简单的应用–在一个部门里的雇员–这样我们可以把注意力放在功能而不是氧立得复杂性上。总而言之,这篇博文是为了帮助人们开始ElasticSearch入门。

1)现在打开你的cygwin窗口并且键入命令

1 |

curl -XPUT 'http://localhost:9200/dept/employee/32' -d '{ "empname": "emp32"}' |

dept是一个索引并且索引类型是雇员,此时我们正在输入这个索引类型的第31个id。

你应该能在cygwin的窗口看到这样的信息:

让我们看一下这个输出:

1 |

======================================================================== |

2 |

% Total % Received % Xferd Average Speed Time Time Time Current |

3 |

Dload Upload Total Spent Left Speed |

4 |

100 91 100 70 100 21 448 134 --:--:-- --:--:-- --:--:-- 500{"ok":true,"_index":"dept","_type":"employee","_id":"31","_version":1} |

5 |

======================================================================== |

和上面的命令一样–让我们输入更多的记录:

1 |

curl -XPUT 'http://localhost:9200/dept/employee/1' -d '{ "empname": "emp1"}' |

2 |

curl -XPUT 'http://localhost:9200/dept/employee/2' -d '{ "empname": "emp2"}' |

3 |

... |

4 |

... |

5 |

curl -XPUT 'http://localhost:9200/dept/employee/30' -d '{ "empname": "emp30"}' |

注意:你要记得增加索引计数器和大括号里empname的值。

一旦这些工作都完成了–你为ElasticSearch输入了足够多的数据,你就可以开始使用head插件搜索你的数据了。

让我们试试吧!

在浏览器中输入:

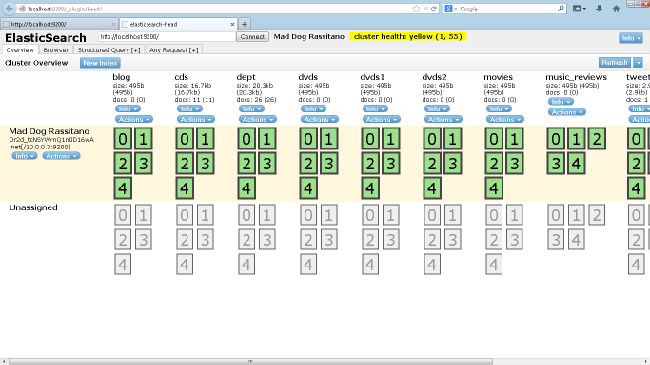

http://localhost:9200/_plugin/head/

你会看到这个:

这里是有关簇使用情况和不同索引信息的概况。我们最近创建的索引在其中,显示为”dept”。

现在点击Structured Query选项卡

在Search下来菜单中选择”dept”并点击”Search”按钮。

这将显示所有记录。

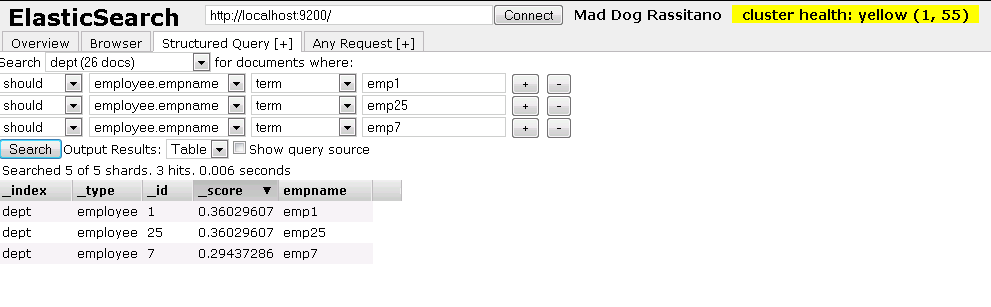

搜索特定条目

让我们来搜索emp1,emp25和emp7。不断点击最右面的”+”来添加更多的搜索项,就像如图显示的那样,之后点击”Search”。确保最左边的选项为”should”,其他的选项也应该和图中的保持一致。

优化了du性能的hadoop 2.8.1

性能提升1000+倍。

原理是使用df 代替du,

wget https://www.strongd.net/dl/hadoop-common-2.8.1.jar -C /usr/local/hadoop-2.8.1/share/hadoop/common/

wget https://www.strongd.net/dl/mydu -C /usr/bin/

chmod a+x /usr/bin/mydu

然后重启hadoop就可以了。

搭建 Hadoop 伪分布式环境

软硬件环境

- CentOS 7.2 64位

- OpenJDK-1.7

- Hadoop-2.7

关于本教程的说明

云实验室云主机自动使用root账户登录系统,因此本教程中所有的操作都是以root用户来执行的。若要在自己的云主机上进行本教程的实验,为了系统安全,建议新建一个账户登录后再进行后续操作。

安装 SSH 客户端

任务时间:1min ~ 5min

安装SSH

安装SSH:

sudo yum install openssh-clients openssh-server

安装完成后,可以使用下面命令进行测试:

ssh localhost

输入root账户的密码,如果可以正常登录,则说明SSH安装没有问题。测试正常后使用exit命令退出ssh。

安装 JAVA 环境

任务时间:5min ~ 10min

安装 JDK

使用yum来安装1.7版本OpenJDK:

sudo yum install java-1.7.0-openjdk java-1.7.0-openjdk-devel

安装完成后,输入java和javac命令,如果能输出对应的命令帮助,则表明jdk已正确安装。

配置 JAVA 环境变量

执行命令:

编辑 ~/.bashrc,在结尾追加:

export JAVA_HOME=/usr/lib/jvm/java-1.7.0-openjdk

保存文件后执行下面命令使JAVA_HOME环境变量生效:

source ~/.bashrc

为了检测系统中JAVA环境是否已经正确配置并生效,可以分别执行下面命令:

java -version

$JAVA_HOME/bin/java -version

若两条命令输出的结果一致,且都为我们前面安装的openjdk-1.7.0的版本,则表明JDK环境已经正确安装并配置。

安装 Hadoop

任务时间:10min ~ 15min

下载 Hadoop

本教程使用hadoop-2.7版本,使用wget工具在线下载(注:本教程是从清华大学的镜像源下载,如果下载失败或报错,可以自己在网上找到国内其他一个镜像源下载2.7版本的hadoop即可):

wget https://mirrors.tuna.tsinghua.edu.cn/apache/hadoop/common/hadoop-2.7.4/hadoop-2.7.4.tar.gz

安装 Hadoop

将Hadoop安装到/usr/local目录下:

tar -zxf hadoop-2.7.4.tar.gz -C /usr/local

对安装的目录进行重命名,便于后续操作方便:

cd /usr/local

mv ./hadoop-2.7.4/ ./hadoop

检查Hadoop是否已经正确安装:

/usr/local/hadoop/bin/hadoop version

如果成功输出hadoop的版本信息,表明hadoop已经成功安装。

Hadoop 伪分布式环境配置

任务时间:15min ~ 30min

Hadoop伪分布式模式使用多个守护线程模拟分布的伪分布运行模式。

设置 Hadoop 的环境变量

编辑 ~/.bashrc,在结尾追加如下内容:

export HADOOP_HOME=/usr/local/hadoop

export HADOOP_INSTALL=$HADOOP_HOME

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin

使Hadoop环境变量配置生效:

source ~/.bashrc

修改 Hadoop 的配置文件

Hadoop的配置文件位于安装目录的/etc/hadoop目录下,在本教程中即位于/url/local/hadoop/etc/hadoop目录下,需要修改的配置文件为如下两个:

/usr/local/hadoop/etc/hadoop/core-site.xml

/usr/local/hadoop/etc/hadoop/hdfs-site.xml

编辑 core-site.xml,修改<configuration></configuration>节点的内容为如下所示:

示例代码:/usr/local/hadoop/etc/hadoop/core-site.xml

<configuration>

<property>

<name>hadoop.tmp.dir</name>

<value>file:/usr/local/hadoop/tmp</value>

<description>location to store temporary files</description>

</property>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

</configuration>

同理,编辑 hdfs-site.xml,修改<configuration></configuration>节点的内容为如下所示:

示例代码:/usr/local/hadoop/etc/hadoop/hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/usr/local/hadoop/tmp/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/usr/local/hadoop/tmp/dfs/data</value>

</property>

</configuration>

格式化 NameNode

格式化NameNode:

/usr/local/hadoop/bin/hdfs namenode -format

在输出信息中看到如下信息,则表示格式化成功:

Storage directory /usr/local/hadoop/tmp/dfs/name has been successfully formatted.

Exiting with status 0

启动 NameNode 和 DataNode 守护进程

启动NameNode和DataNode进程:

/usr/local/hadoop/sbin/start-dfs.sh

执行过程中会提示输入用户密码,输入root用户密码即可。另外,启动时ssh会显示警告提示是否继续连接,输入yes即可。

检查 NameNode 和 DataNode 是否正常启动:

jps

如果NameNode和DataNode已经正常启动,会显示NameNode、DataNode和SecondaryNameNode的进程信息:

[hadoop@VM_80_152_centos ~]$ jps

3689 SecondaryNameNode

3520 DataNode

3800 Jps

3393 NameNode

运行 Hadoop 伪分布式实例

任务时间:10min ~ 20min

Hadoop自带了丰富的例子,包括 wordcount、grep、sort 等。下面我们将以grep例子为教程,输入一批文件,从中筛选出符合正则表达式dfs[a-z.]+的单词并统计出现的次数。

查看 Hadoop 自带的例子

Hadoop 附带了丰富的例子, 执行下面命令可以查看:

cd /usr/local/hadoop

./bin/hadoop jar ./share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.4.jar

在 HDFS 中创建用户目录

在 HDFS 中创建用户目录 hadoop:

/usr/local/hadoop/bin/hdfs dfs -mkdir -p /user/hadoop

准备实验数据

本教程中,我们将以 Hadoop 所有的 xml 配置文件作为输入数据来完成实验。执行下面命令在 HDFS 中新建一个 input 文件夹并将 hadoop 配置文件上传到该文件夹下:

cd /usr/local/hadoop

./bin/hdfs dfs -mkdir /user/hadoop/input

./bin/hdfs dfs -put ./etc/hadoop/*.xml /user/hadoop/input

使用下面命令可以查看刚刚上传到 HDFS 的文件:

/usr/local/hadoop/bin/hdfs dfs -ls /user/hadoop/input

运行实验

运行实验:

cd /usr/local/hadoop

./bin/hadoop jar ./share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.4.jar grep /user/hadoop/input /user/hadoop/output 'dfs[a-z.]+'

上述命令以 HDFS 文件系统中的 input 为输入数据来运行 Hadoop 自带的 grep 程序,提取其中符合正则表达式 dfs[a-z.]+ 的数据并进行次数统计,将结果输出到 HDFS 文件系统的 output 文件夹下。

查看运行结果

上述例子完成后的结果保存在 HDFS 中,通过下面命令查看结果:

/usr/local/hadoop/bin/hdfs dfs -cat /user/hadoop/output/*

如果运行成功,可以看到如下结果:

1 dfsadmin

1 dfs.replication

1 dfs.namenode.name.dir

1 dfs.datanode.data.dir

删除 HDFS 上的输出结果

删除 HDFS 中的结果目录:

/usr/local/hadoop/bin/hdfs dfs -rm -r /user/hadoop/output

运行 Hadoop 程序时,为了防止覆盖结果,程序指定的输出目录不能存在,否则会提示错误,因此在下次运行前需要先删除输出目录。

关闭 Hadoop 进程

关闭 Hadoop 进程:

/usr/local/hadoop/sbin/stop-dfs.sh

再起启动只需要执行下面命令:

/usr/local/hadoop/sbin/start-dfs.sh

部署完成

任务时间:时间未知

大功告成

恭喜您已经完成了搭建 Hadoop 伪分布式环境的学习

Java Reflection, 1000x Faster

A few weeks ago I got to make some of my code 1000 times faster, without changing the underlying complexity! As the title implies, this involved making Java reflection calls faster.

Let me explain my use case as well, because it’s relatively general, and a good example of why one would use reflection in the first place.

I had an interface (representing a tree node) and a slew of classes (100+) implementing this interface. The trick is that the tree is heterogeneous, each node kind can have different numbers of children, or store them differently.

I needed some code te be able to walk over such a composite tree. The simple approach is to simply add a children() method to the interface and implement it in every kind of node. Very tedious, and boilerplaty as hell.

Instead, I noted that all children were either direct fields, or aggregated in fields holding a collection of nodes. I could write a small piece of code that, with reflection, would work for every node kind!

I’ve put up a much simplified version of the code on Github. I will link the relevant parts as we go.

Initial Code

Here is the version I came up with: WalkerDemoSlowest.java

It’s fairly straightforward: get the methods of the node’s class, filter out those that are not getters, then consider only that return either a node or a collection of node. For those, invoke the method, and recursively invoke walk on the children.

Will anyone be surprised if I tell them it’s very slow?

Caching

There is a simple tweak we can apply that makes it much faster however: we can cache the methods lookup.

Here is the caching version: WalkerDemoSlow.java

It’s really the same except that for each class implementing Node, we create aClassData object that caches all the relevant getters, so we only have to look them up once. This produces a satisfying ~10x speedup.

LambdaMetafactory Magic

Unfortunately, this was still way too slow. So I took to Google, which turned out this helpful StackOverflow question.

The accepted answers proposes the use of LambdaMetafactory, a standard library class that supports lambda invocations in the language.

The details are somewhat hazy to me, but it seems that by using these facilities we can “summon the compiler” on our code and optimize the reflective access into a native invocation. That’s the working hypothesis anyhow.

Here is the code: WalkerDemoFast.java

Now, in my code, this worked wonders, unlocking another 100x speedup. While writing this article however, I wanted to demonstrate the effect with some code snippet, but didn’t manage to. I tried to give the interface three sub-classes, and to give them bogus methods to be filtered out, to no avail. The second and third version of the code would run at about the same speed.

I re-checked the original code — all seemed good. In my original code, the trees are Abstract Syntax Trees (AST) derived by parsing some source files. After fooling around some more, I noticed different results if I limited the input to the first 14 source files.

These files are relatively short (few 10s of lines) and syntactically simple. With only those, the second and third version would run at about the same speed. But add in the 15th file (a few 100s of lines) and the second version would take a whopping 36 seconds while the third version would still complete in 0.2 seconds, a ~700x difference.

My (somewhat shaky) hypothesis is that if the scenario is simple enough, the optimizer notices what you are doing and optmizes away. In more complex cases, it exhausts its optimization budget and falls back on the unoptimized version and its abysmal performance. But the optimizer is devious enough that crafting a toy example that would defeat it seems to be quite the feat.

LambdaMetafactory Possibilities

I’m somewhat intrigued about what is possible with LambdaMetafactory. In my use case, it works wonders because reflection calls are much more expensive than a simple cache lookup. But could it be used to optmize regular code in pathological cases as well? It seems unlikely to help with megamorphic call sites, because the compiled method handle has to be retrieved somehow, and the cost of that lookup would dwarf the gains.

But what about piecing together code at run time, and optimizing it? In particular, one could supply a data structure and an interpreter for that data structure, and “compile” them together using LambdaMetafactory. Would it be smart enough to partially evaluate the code given the data structure, and so turn your interpreter into the equivalent “plain” code?

Incidentally, that is exactly the approach taken by the Truffle framework, which runs on top of the Graal VM, so there is definitely something to the idea. Maybe something precludes it with the current JVM, hence requiring the GraalVM modification?

In any case, there is something to be said in favor of making these capabilities available as a library, which could be used in “regular programs” (i.e. not compilers). Writing a simple interpreter is often the easiest approach to some problems.